Sara Oliver G.V.

Associate Creative Director

Looking back at the past year, we can see how quickly voice technology is growing. Spending more time at home, reduced contact with the outside world, and our rising internet usage are a few of the factors accelerating its adoption. As Brian Roemmele (president of Multiplex) said, the Voice-First sector will continue to be the fastest-adopted technology in history.

In 2020, we witnessed considerable progress that brought us closer to having more natural conversations with our AI assistants. Amazon's support for multi-value slots and for multi-turn interactions and conversations are just a couple of examples of movement in this direction.

We found ourselves looking for entertainment within the home, as there is very little we can do outside. The pandemic has created higher expectations for digital experiences (Fjord Trends 2021), along with a demand for safer, touchless interactions.

We’ve seen a significant rise in the number of smart devices surrounding us: phones, tablets, speakers, TVs, robots, vacuum cleaners, lights, air quality monitors, alarms, thermostats, wearables that track our health, game consoles, VR headsets, and many more. Our lives are full of cross-device moments.

At first, touch (haptic) interaction was our only way, as users, to convey our intents to technology. Then visuals entered the picture with the first screens. We feel comfortable with haptics and visuals; in fact, navigating by touch and sight is very natural and intuitive to us. For a long time these were our only modes, until voice came along and changed the picture radically.

When the first speakers with conversational assistants came out, we saw the speaker as the place to get in touch with them. In a way, it was as if our AI friends lived only inside the speaker. Then voice-enabled interactions began to be integrated into more and more products, like phones, earbuds, smartwatches, and even refrigerators. This lets us experience the benefits and time savings of voice interaction, as voice increasingly replaces touch. Voice interaction is no longer just a product from Amazon or Google, but a mode, an interface, in itself. And we now perceive it that way.

Picture yourself saying to your phone: “Hey Assistant, write to Oana on WhatsApp: I am on my way.” This would save you a fair amount of time, right?

The main challenge for our voice assistants lies in their user experience, that is, in the software, not in the hardware device where we find them. Given the advantages of this new mode, it only makes sense to keep integrating voice across our ecosystems, especially for in-app search and navigation, where voice commands are faster and more intuitive than clicking or typing.

At a recent Clubhouse event with Danny Bernstein (Managing Director, Partnerships for Google Assistant) and Brett Kinsella (Founder and CEO of Voicebot.ai), Danny disclosed that this year’s Google Assistant strategy is to integrate the voice assistant completely into the phone ecosystem. The idea is that you will be able to open apps through voice commands and control them from within. Google calls this In-App Actions, but in essence it is deep linking, and it is the key to making our assistants more helpful.
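To make the deep-linking idea concrete, here is a minimal sketch of how a parsed voice command might be turned into a link that opens a specific screen inside an app. It assumes the assistant's language understanding has already produced an intent name plus slot values; the `VoiceIntent` type, the `DEEP_LINKS` table, the `exampleapp://` scheme, and the intent names are all hypothetical placeholders, not Google's or any app's real API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from urllib.parse import urlencode


@dataclass
class VoiceIntent:
    """A parsed voice command: an intent name plus the slot values extracted from it."""
    name: str
    slots: dict[str, str] = field(default_factory=dict)


# Hypothetical intent-to-deep-link table. The "exampleapp://" scheme and
# paths are illustrative placeholders, not real app URLs.
DEEP_LINKS = {
    "send_message": "exampleapp://chat/send",
    "resume_show": "exampleapp://player/resume",
}


def resolve_deep_link(intent: VoiceIntent) -> str | None:
    """Map a voice intent to a deep link, or return None if the app has no matching screen."""
    base = DEEP_LINKS.get(intent.name)
    if base is None:
        return None
    if not intent.slots:
        return base
    # Slot values become query parameters (urlencode turns spaces into "+").
    return f"{base}?{urlencode(intent.slots)}"
```

For the WhatsApp-style example above, `resolve_deep_link(VoiceIntent("send_message", {"contact": "Oana", "text": "I am on my way"}))` would produce `exampleapp://chat/send?contact=Oana&text=I+am+on+my+way`: one spoken sentence replaces opening the app, finding the contact, and typing the message.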

The current experience does not let users fully understand which functions their voice assistants support. But if we manage to translate haptic commands into voice navigation commands, we will improve the assistant experience.

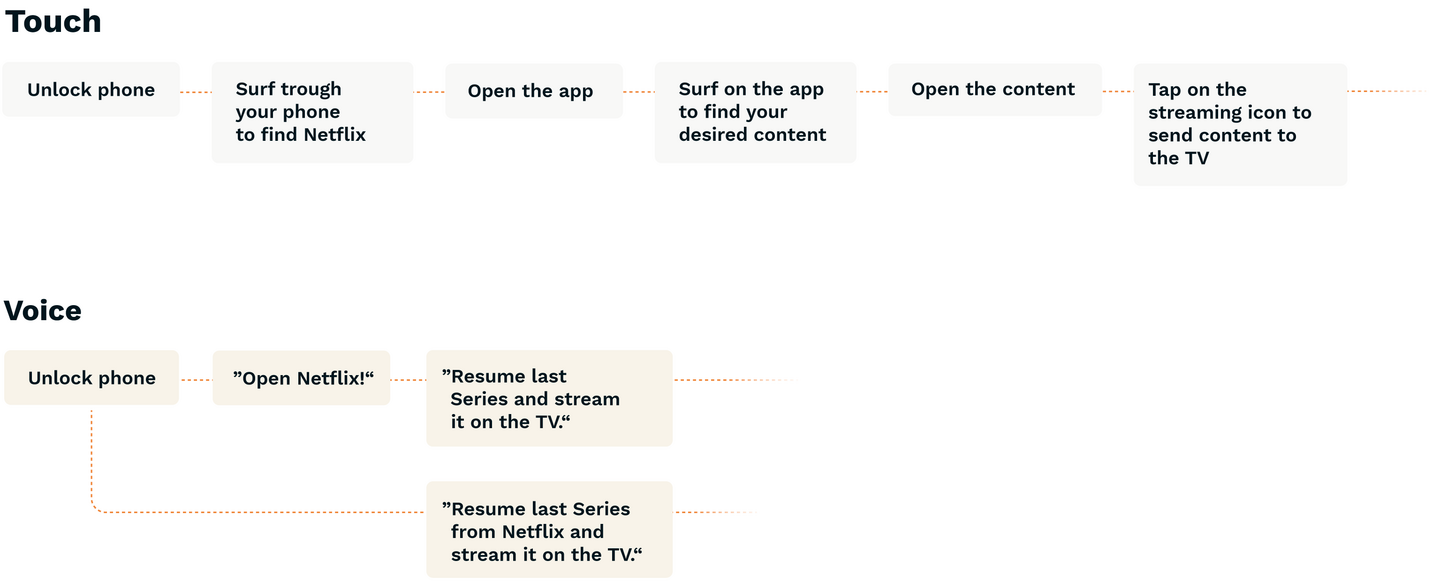

Imagine that, instead of navigating through your phone, opening Netflix, searching for the TV show you were watching, and tapping the cast button to send it to the TV, you could simply say to your phone: “Hey Assistant, resume the last TV show I was watching on Netflix on the TV.”

We go from toggling between different devices to simply asking the assistant for what we need. Translating haptic movements into voice commands is about turning what users already know how to do into words. The technology is already there, and our focus as conversational designers is on creating frictionless, effective commands and customer experiences.

Voice, touch, and visual inputs and outputs are modes of communicating with our devices and receiving information from them. This is called multimodality. Multidevice refers to communication between devices. Voice-only inputs and outputs limit our experience. Instead, if you use voice as one more channel that supports the other modes, the chances of successfully interacting with your technology increase.

Sara Oliver G.V., UX Designer at VUI.agency

Earlier, we talked about how used to haptics and visuals we are. Following that line, think about how the smartphone has become an extension of our body. The device follows us everywhere, and we can control almost everything through it. The smartphone has become a universal remote control, especially in our smart homes.

Services that send content from a phone or tablet to other devices over Wi-Fi or Bluetooth, such as Spotify Connect, AirPlay, and Chromecast, have been very successful. Look, for instance, at the new concept Samsung has launched for its new smart TVs, in which the TV and the smartphone are designed to complement each other. The TV is no longer just a TV, just as the phone is no longer only for making and receiving calls. Every single device we own is now a portal to the internet.

Multimodality and multidevice experiences are exciting, and they can benefit your company. They elevate the user experience to another level of engagement and immersion. But designing for them is not an easy task, and not a one-person job. You need a dedicated, multidisciplinary team: user experience designers, graphic designers, linguists, and developers working together to coordinate, curate, and create the integrations and interactions your customers need.

The voice industry was already growing fast before the pandemic, but the current situation has only accelerated that growth. Voice is that new element that makes us re-explore our daily environment.

At the moment, what we need is to design better control of our systems and better customer experiences. This year, we have to focus on successfully integrating our assistants across our devices (phones, home gadgets, automotive systems, and wearables) to provide a frictionless multimodal and multidevice experience.

At the same time, we have to improve our assistants' core experiences to increase the reliability of, and trust in, their core functions. This year is about improving specific use cases and making them consistent.

Let’s get together to build better voice, multimodal, and multidevice end-to-end experiences for your users. Feel free to drop us a message with your ideas, or subscribe to our newsletter to stay updated on voice, multimodality, and other trends.