Mohamed Hassan

VP Design & CX

We took part in the Chatbot Africa & Conversational AI Summit 2021, and this is a follow-up on our talk: “Contextual voice experiences.”

There was a surprising amount of engagement and interest in the summit. It was interesting to connect with voice experts from the African region. Chatbots and virtual assistants are having a moment right now, transforming millions of businesses all over the world, and accompanying us in our everyday lives from our phones and smart speakers.

During the conference, the talks on the challenges of connectivity, language support, and localization were headliners. It was a pleasant surprise to see that the recurring keywords throughout the presentations were inclusivity and the AI diversity challenge. The latter is also one of the main tech challenges we need to solve quickly, a fact clearly pointed out by the Stanford University 2021 AI Trends report.

Furthermore, if we want to create intelligent assistants, we need to approach the general intelligence problem by including everyone. Everyone means literally everyone. It is still a big challenge for today’s voice assistants to accommodate more languages, dialects, and even cultural concepts.

Although voice assistants today can talk properly in some languages, they still can’t fully understand what we, including people with disabilities, say most of the time, or what kids say when they use their simpler vocabulary. AI has had this language understanding challenge for quite a long time. Maybe voice assistants are missing a totally different component of understanding.

Or let’s say that they are missing a component of intelligence as this Facebook research suggests:

“If AI systems can glean a deeper, more nuanced understanding of reality beyond what’s specified in the training data set, they’ll be more useful and ultimately bring AI closer to human-level intelligence.”

Since people use language to communicate, the learning curve to use voice assistants shouldn’t be that long. Talking to a voice assistant will immediately reveal its limitations. Can it understand the difference between “here” and “there”? What does that mean spatially? Is there a relationship between them?

After all, we can’t label everything in the world. Common sense and context recognition are concepts that need to exist in an intelligent voice assistant. Common sense helps humans accomplish new tasks using mental models, without needing much training. Compare what you could do as a child with what you can do as an adult. This is where the “Contextual” factor in voice experiences comes from, and it is my current research focus in my Ph.D. studies.

Let’s have a look at the already available features that help voice assistants be smarter and understand more context.

Currently, voice assistants on the market can make use of the following data:

It helps not only with training but also with estimating the probabilities of what users might ask next.

It helps with customizing dialogues according to age and preferences.

It helps with improving the proactiveness of voice assistants and with increasing user satisfaction and long-term usage.

It helps with understanding the level of engagement and drop-outs.

It helps with the decision on which devices or services to start/continue the experience on.

It helps with adjusting the dialogues according to mood and emotions, and even with understanding the intention. (There is a difference between saying “I need daddy” in a low, slow tone versus a high, loud tone. The latter might indicate an emergency request.)

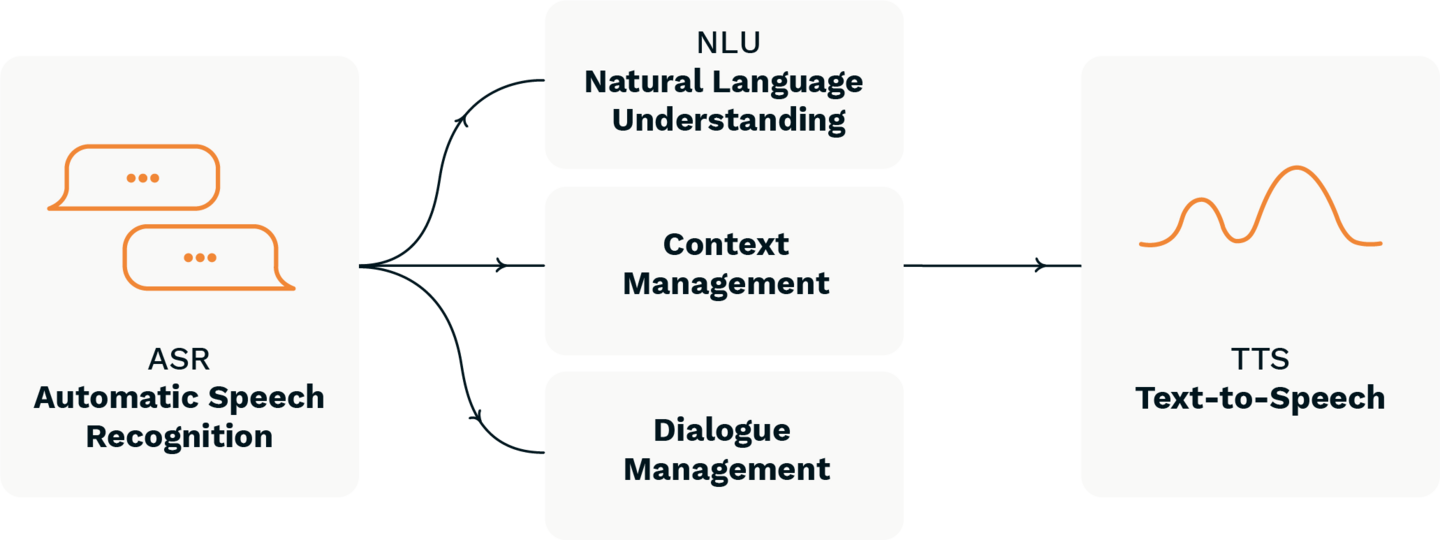

However, we need further efforts that build on top of these features. We need common sense, or a context management component, in the technology behind voice assistants.

AI research today is interested in modeling common sense and enabling machines to learn key concepts by themselves. Self-supervised learning is a key aspect of unlocking that “Context Management” component in voice tech.

One important benefit of that component is that the voice assistant will understand how a new request relates to what has been said before in the conversation history, and will draw assumptions that lead to useful conclusions about the actual intention behind that request. In other words, “I need Daddy” will mean “Please tell daddy to come to me” (based on our assumption from the few seconds we saw in that video).

The relationship between people or objects in a home setting is very important for augmenting the voice assistant’s ability to make decisions, i.e., to respond.

This relationship helps voice assistants understand the difference between “here” and “there” or “inside” and “outside.” It even helps with the meaning behind a “living room” and a “bedroom”: which activities and usage take place there on a normal day, and where furniture and devices are located in these rooms.

A question like “Does it make sense to turn on all of the bedroom lights at 3 a.m. while people are sleeping inside?” could be easily answered by a voice assistant that has been given all the required parameters.
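As a minimal sketch of how such a question could be answered once the parameters are available, consider the following Python example. Everything in it is an assumption made for illustration: the `RoomContext` fields, the quiet-hours window (22:00 to 06:00), and the two decision labels are hypothetical and do not come from any existing voice platform.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class RoomContext:
    """Hypothetical context parameters for one room."""
    name: str
    people_asleep: int  # how many people are currently asleep in the room


def is_quiet_hours(now: time, start: time = time(22, 0), end: time = time(6, 0)) -> bool:
    """Return True if `now` is inside the assumed quiet-hours window.

    The window wraps past midnight, so it is the union of
    [start, 24:00) and [00:00, end).
    """
    return now >= start or now < end


def decide_all_lights_on(room: RoomContext, now: time) -> str:
    """Decide how to react to an "all lights on" request for `room`."""
    if is_quiet_hours(now) and room.people_asleep > 0:
        # The request conflicts with the context, so check with the user first.
        return "ask_for_confirmation"
    return "turn_on_all"


bedroom = RoomContext(name="bedroom", people_asleep=2)
print(decide_all_lights_on(bedroom, time(3, 0)))   # request at 3 a.m.
print(decide_all_lights_on(bedroom, time(15, 0)))  # same request at 3 p.m.
```

The point of the sketch is that the rule itself is trivial. The hard part is obtaining reliable values for parameters such as `people_asleep`, which is exactly what a context management component would have to provide.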

With that level of understanding, we can have a voice assistant that can really offer assistance, and maybe even intelligence, beyond the mainstream command-and-control type of assistants.

An assistant that waits to be addressed and commanded to directly fulfil the user’s intentions. A conversation with this assistant can result in a dialogue. (If you ask for a recipe, it will show you a recipe.)

An assistant that can offer recommendations when asked, fulfilling the user’s intentions both directly and indirectly. A conversation with this assistant can result in a dialogue. (If you ask for a recipe, it will show you a recipe and advise you to buy missing ingredients if necessary.)

An assistant that can work with users to formulate intentions and then fulfil them in a cooperative style. A conversation with this assistant can result in a dialogue or debate. (If you ask for a recipe, it will show you a recipe and advise you to buy missing ingredients if necessary, then start cooking with you, e.g., by turning on the oven.)

An assistant that can govern itself and act on its own to fulfil the user’s preprogrammed intentions. A conversation with this assistant can result in a dialogue, debate, discourse, or diatribe. (If you ask for a recipe, it will just start cooking.)
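The recipe example running through these four descriptions can be sketched in a few lines of Python. This is only an illustration: the enum member names (`COMMANDED`, `RECOMMENDING`, `COOPERATIVE`, `AUTONOMOUS`) and the action strings are hypothetical labels chosen for this sketch, not established terminology.

```python
from __future__ import annotations

from enum import Enum


class Archetype(Enum):
    """Hypothetical labels for the four kinds of assistant described above."""
    COMMANDED = 1     # waits to be addressed and fulfils the request directly
    RECOMMENDING = 2  # also offers recommendations
    COOPERATIVE = 3   # also takes part in carrying out the task
    AUTONOMOUS = 4    # acts on its own on preprogrammed intentions


def handle_recipe_request(archetype: Archetype, missing_ingredients: list[str]) -> list[str]:
    """Return the actions an assistant of this kind takes when asked for a recipe."""
    if archetype is Archetype.AUTONOMOUS:
        return ["start cooking"]

    actions = ["show recipe"]
    if archetype in (Archetype.RECOMMENDING, Archetype.COOPERATIVE) and missing_ingredients:
        actions.append("advise buying: " + ", ".join(missing_ingredients))
    if archetype is Archetype.COOPERATIVE:
        actions.append("turn on oven")
    return actions


for archetype in Archetype:
    print(archetype.name, handle_recipe_request(archetype, ["eggs"]))
```

Note how each step up needs more context to act sensibly: the first kind only needs the request, the second needs to know what is in the kitchen, the third needs access to devices, and the fourth needs to know when cooking is wanted at all.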

If we look closely, we find that more and more context is needed as we go from the 1st to the 4th archetype. There is also a correlation with agency. The further we go up the archetypes, the more agency over our actions we give up. We lose detailed control over the voice assistant. The voice assistant becomes more than an obedient agent for us; it might become “us”. (We will talk more about that in another blog.)

Voice is a very personal interface. It is the closest interface between humans and machines because it uses “our” language, and hence our way of thinking.

First, the design process for a voice experience needs to include contextual inquiry. We need to start integrating environmental and physical models into our contextual inquiry (Holtzblatt et al., 1998). That will help us understand the macro and micro levels of voice interactions and define the needed context parameters.

Secondly, it is a technical requirement: current voice technology and solutions need to include a context management and recognition system that developers can use. Developments in self-learning algorithms are necessary to enable that.

At VUI.agency, we take contextual information into account when we design voice experiences. Starting from the inquiry phase until testing, the user’s context and the situation are at the center of our process. We try to push the limits of technology to achieve a smarter and more assistive voice assistant.

If we want to have smart assistants that help in our everyday lives and eliminate our frustrations, we need to invest more in new, innovative frameworks and technologies.

Finally, businesses and brands should focus more on exploring new ground for voice and on surpassing the current technical limitations.

Contact us if you want to know more about context in voice.