We are all, unavoidably, biased. Each of us grew up in unique conditions. We speak certain languages and have particular cultural backgrounds. We have met certain people and have been shaped by diverse experiences, or by the absence of them. We take our socialization with us wherever we go, and we do not leave it at the door when we enter the workplace.

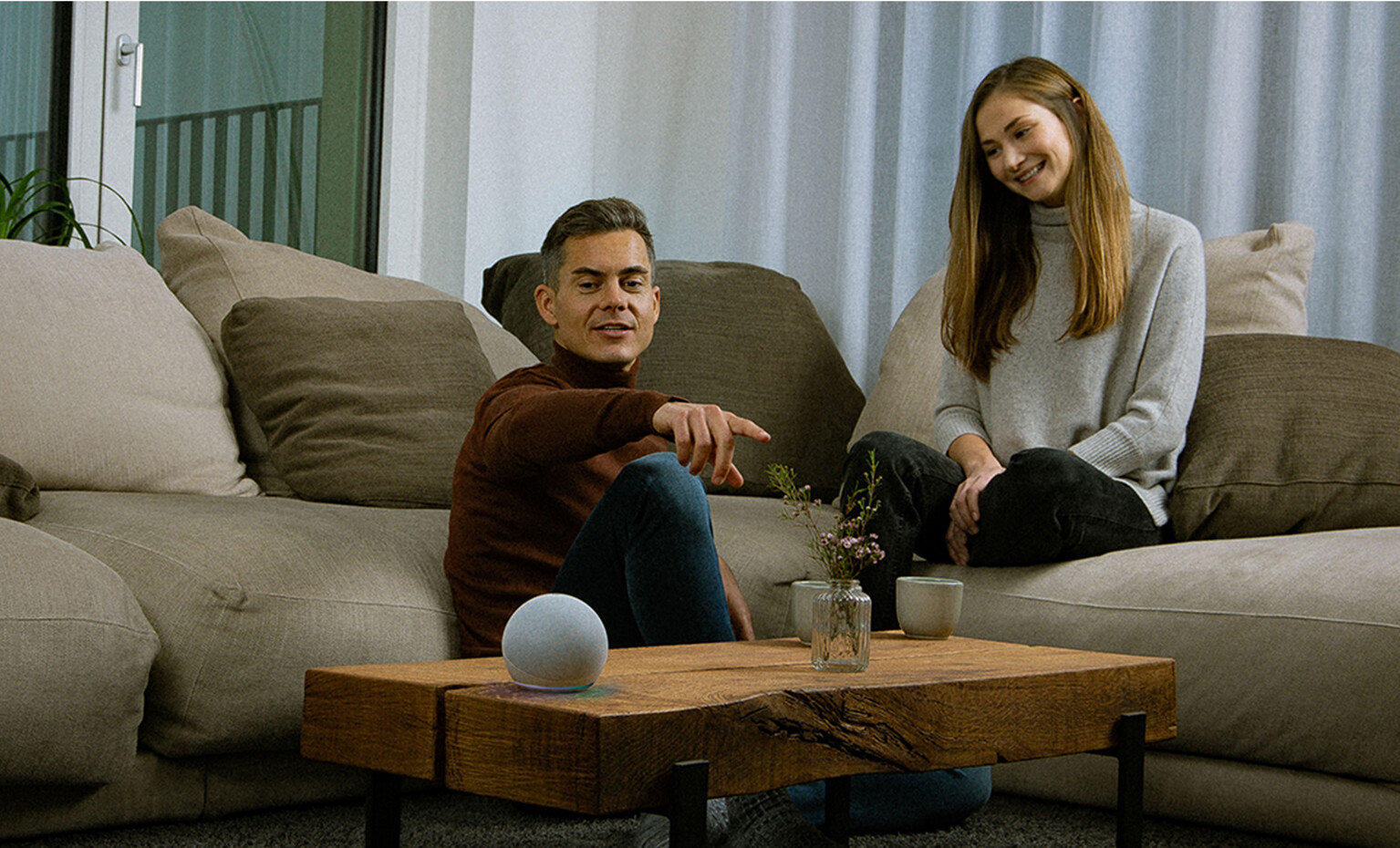

This is not a problem per se, but here comes the catch: say you have a dozen people working on a voice assistant or any other AI-driven technology, and they are a homogeneous group. They most probably share large parts of their cultural understanding, a common language, and a belief system.

Their learned biases reinforce one another and leave a mark on their work, because they are the ones who select training data, train algorithms, and perform the pre- and post-processing of data. We know that women make up only 25% of people working in tech in Europe while accounting for 51% of the overall population. In the US, Hispanic women hold a strikingly low 1% of computing occupations while making up 17% of the total population.